3 ANOVA

3.1 Yesterday

So, yesterday we talked about various things:

- measures of central tendency and of dispersion

- null hypotheses and alternative hypotheses

- a short review of the \(t\) test

Today’s plan is to introduce cases where we have more than independent variable—situations where we have more than one main effect.

In these cases, our \(t\)-test does not work:

t.test(lung_cap ~ smoker + age, data = lungs)Error in t.test.formula(lung_cap ~ smoker + age, data = lungs): ‘formula’ missing or incorrect

Question: Can you think of a reason why we cannot just several \(t\)-tests here? There are at least two:

- one has to do with the kind of data we have here and

- one has to do with a concept from yesterday: power.

This means that we need to talk about more sophisticated tests that let us draw an inference on the basis of multiple factors.

We will also talked about a very central topic in the analysis of (psycho)linguistic data: repeated measures.

In contrast to the lung capacity data we saw yesterday where every person had the lung capacity measured once, in linguistics we will often give participants multiple items of the same condition. This means that we have several data points per person per experimental condition.

And one of our goal for today will be to integrate this design feature into our statistical toolbox.

3.2 How to get to Rome

Before we do so, let me briefly say something about equivalence. Yesterday, we used a very specific function, namely t.test(), to derive our statistical result. However, especially at this simple level where we’re only dealing with a single independent variable, a lot of tests will give us the same result.

Before we move on to the illustration, let me introduce a new data set. This is from an experiment I did with two colleagues of mine: Yuqui Chen and Mailin Antomo (Chen et al. 2022).

Here are two sample items. People saw all items (several hard and soft triggers), but only one condition per item, either the assertion was at-issue or the presupposition.

Soft trigger win

The panda, the duck, and the frog are having a drawing competition. All like the duck’s drawing the most. The duck receives a crown as a prize. The clown did not pay attention again and asks:

- Assertion at-issue: “Did the duck do the best at the competition?”

- Presupposition at-issue: “Did the duck participate in the competition?”

Little Peter responds, “The duck won the competition.”

Hard trigger again

The panda is a member of a soccer club. Recently, he has been playing very well and scored many goals in the past weeks. Yesterday there was a football game and he scored a goal. The clown did not pay attention again and asks:

- Assertion at-issue: “Did the panda score a goal yesterday?”

- Presupposition at-issue: “Did the panda score a goal for the first time yesterday?”

Little Peter responds, “The panda scored a goal again yesterday.”

A sample of our image material for the “win” item.

The (unfortunately very ugly) Likert-type scale from 1 (unacceptable) to 5 (acceptable)

We will only work with a smaller set of data here. As you can see in the code below, I am excluding the children, and one level of the trigger type independent variable (namely non-restrictive relative clauses). And there is some other stuff in the columns that I am dropping here, but this level of complexity should be enough for now.

d_psp <- read_csv(here("assets", "data", "psp-data.csv")) %>%

filter(trigger_cat != "appo", stage != "Children") %>%

select(id, itemid, trigger_cat, issue, judgment)

d_psp## # A tibble: 657 × 5

## id itemid trigger_cat issue judgment

## <chr> <chr> <chr> <chr> <dbl>

## 1 auncqkpvl6b3rp0d3g833vmuh6 gewinnen2 soft at-issue 5

## 2 auncqkpvl6b3rp0d3g833vmuh6 schaffen1 soft at-issue 1

## 3 auncqkpvl6b3rp0d3g833vmuh6 auch3 hard at-issue 1

## 4 auncqkpvl6b3rp0d3g833vmuh6 entdecken1 soft at-issue 5

## 5 auncqkpvl6b3rp0d3g833vmuh6 schaffen3 soft at-issue 1

## 6 auncqkpvl6b3rp0d3g833vmuh6 cleft3 hard non-at-issue 5

## 7 auncqkpvl6b3rp0d3g833vmuh6 wieder1 hard non-at-issue 5

## 8 auncqkpvl6b3rp0d3g833vmuh6 auch2 hard non-at-issue 5

## 9 auncqkpvl6b3rp0d3g833vmuh6 auch1 hard at-issue 1

## 10 auncqkpvl6b3rp0d3g833vmuh6 entdecken2 soft non-at-issue 5

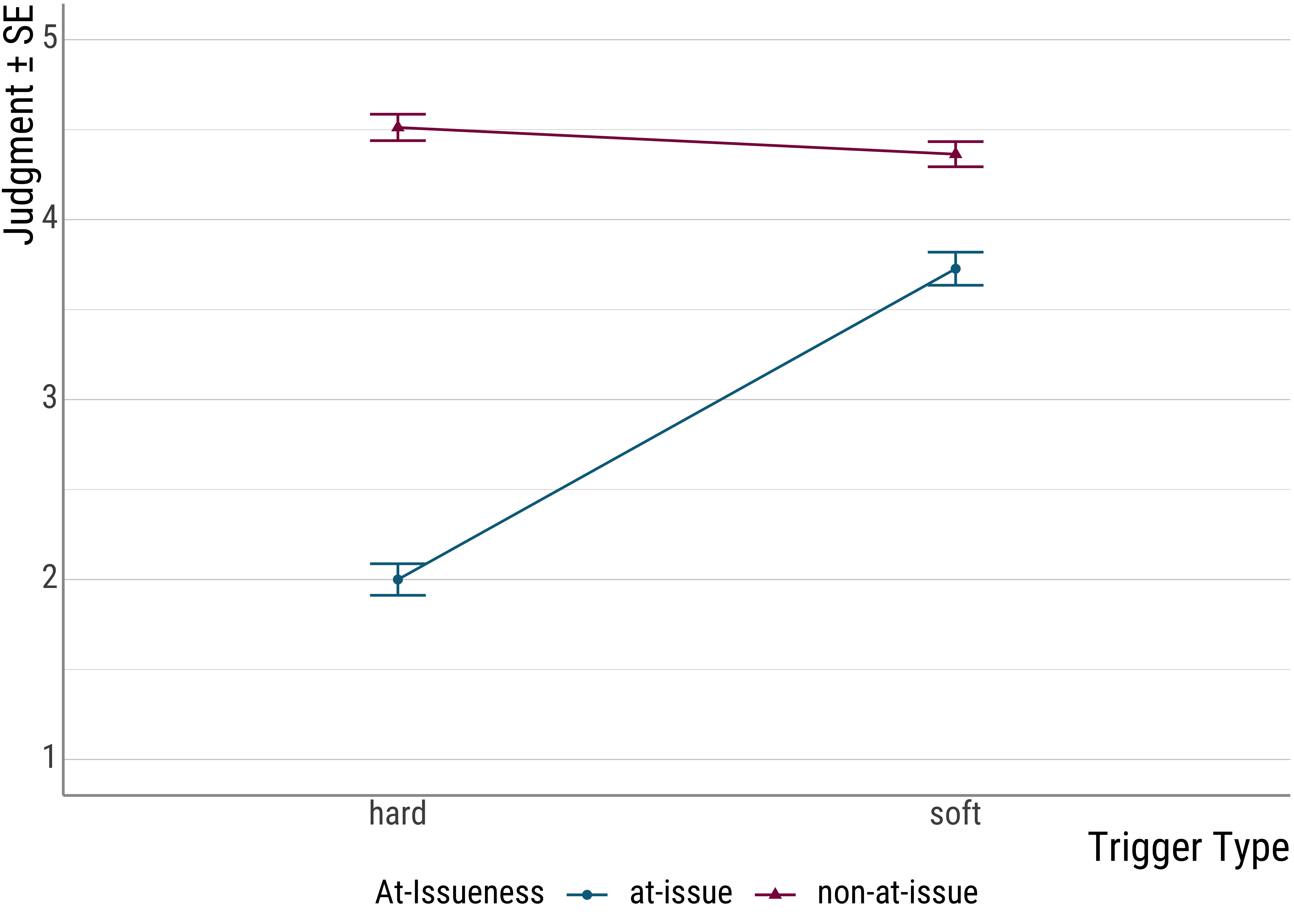

## # … with 647 more rowsHere’s a plot of the data:

Now, here’s the lm() call that gives us the difference betweem the two trigger types (remember that this is equivalent to the \(t\) test):

summary(lm(judgment ~ trigger_cat, data = d_psp))##

## Call:

## lm(formula = judgment ~ trigger_cat, data = d_psp)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.045455 -1.244648 -0.045455 0.954545 1.755352

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.244648 0.076661 42.3246 < 0.00000000000000022 ***

## trigger_catsoft 0.800806 0.108168 7.4033 0.0000000000004096 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.3863 on 655 degrees of freedom

## Multiple R-squared: 0.077217, Adjusted R-squared: 0.075808

## F-statistic: 54.809 on 1 and 655 DF, p-value: 0.00000000000040959And here’s one more equivalent command (though you do not see the \(t\) value below, if you look at the results above, you will see the \(F\) value at the very bottom and that the \(p\)-values are the same):

summary(aov(judgment ~ trigger_cat, data = d_psp))## Df Sum Sq Mean Sq F value Pr(>F)

## trigger_cat 1 105.33 105.330 54.809 0.0000000000004096 ***

## Residuals 655 1258.75 1.922

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1So, in this simple case, the following three commands produce identical results: t.test(), lm(), aov().

But what is this aov() command exactly? It stands for the test people commonly call ANOVA (ANalysis Of VAriance). For a long while it was the standard test in linguistics (and related disciplines).

For us, it is important to understand it as an extension of the \(t\)-test: The two models are exactly identical in the simple case with one predictor—for the ANOVA, this is often called one-way ANOVA.

In fact, the same computation we already did for the \(t\)-test will show up again with the ANOVA, only the names for the parts will be different.

Importantly, the ANOVA, but not the \(t\)-test, handles more complex analytical situations as well, namely ones where we want to include several independent variables. And of course, in these situations the equivalence breaks down.

What about the lm()? LM is short for linear model, i.e., a type of analysis that works by fitting a line by minimizing the distance of the actual observations to the line. Again, this line fitting procedure is equivalent to the ANOVA and the \(t\)-test in this case.

set.seed(1234)

sim <- tibble(

a = rnorm(n = 15, mean = 2),

b = rnorm(n = 15, mean = 3),

) %>%

pivot_longer(cols = c(a, b), names_to = "cond")

sim## # A tibble: 30 × 2

## cond value

## <chr> <dbl>

## 1 a 0.793

## 2 b 2.89

## 3 a 2.28

## 4 b 2.49

## 5 a 3.08

## 6 b 2.09

## 7 a -0.346

## 8 b 2.16

## 9 a 2.43

## 10 b 5.42

## # … with 20 more rowst.test(value ~ cond, data = sim, var.eqal = TRUE)##

## Welch Two Sample t-test

##

## data: value by cond

## t = -3.22713, df = 27.9739, p-value = 0.0031812

## alternative hypothesis: true difference in means between group a and group b is not equal to 0

## 95 percent confidence interval:

## -1.76840555 -0.39508234

## sample estimates:

## mean in group a mean in group b

## 1.662703 2.744447summary(lm(value ~ cond, data = sim))##

## Call:

## lm(formula = value ~ cond, data = sim)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.00840 -0.57440 -0.21824 0.56148 2.67139

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.66270 0.23702 7.0149 0.0000001251 ***

## condb 1.08174 0.33520 3.2271 0.003179 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.91799 on 28 degrees of freedom

## Multiple R-squared: 0.27111, Adjusted R-squared: 0.24507

## F-statistic: 10.414 on 1 and 28 DF, p-value: 0.0031789summary(aov(value ~ cond, data = sim))## Df Sum Sq Mean Sq F value Pr(>F)

## cond 1 8.7763 8.7763 10.414 0.003179 **

## Residuals 28 23.5959 0.8427

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Notice here that \(t^2 = F: 3.2271^2 = 10.414\). And notice that the coefficient for the slope of cond is the same as the mean difference we find for the \(t\)-test:

t.test(value ~ cond, data = sim) %>%

tidy() %>%

select(1:3)## # A tibble: 1 × 3

## estimate estimate1 estimate2

## <dbl> <dbl> <dbl>

## 1 -1.08 1.66 2.74What is important to remember is this: All of the tests we have discussed so far share a common core, and are actually mathematically identical in the simple case.

With this in mind, let’s abandon the simple case and move on to repeated measures.

3.3 Repeated Measures

As I hinted at at the outset, we ignored a very systematic part of the variance in the model above: repeated measures. This is the situation where we have multiple data points from the same person (each person judged different items in the same condition).

While this type of systematic variance is not necessarily due to our experimental manipulations (more on that a little later when we talk about random slopes), it would be bad to ignore it because it may be a confound in our data. That is, a source of systematic variation in our data that has nothing to do with our experiment.

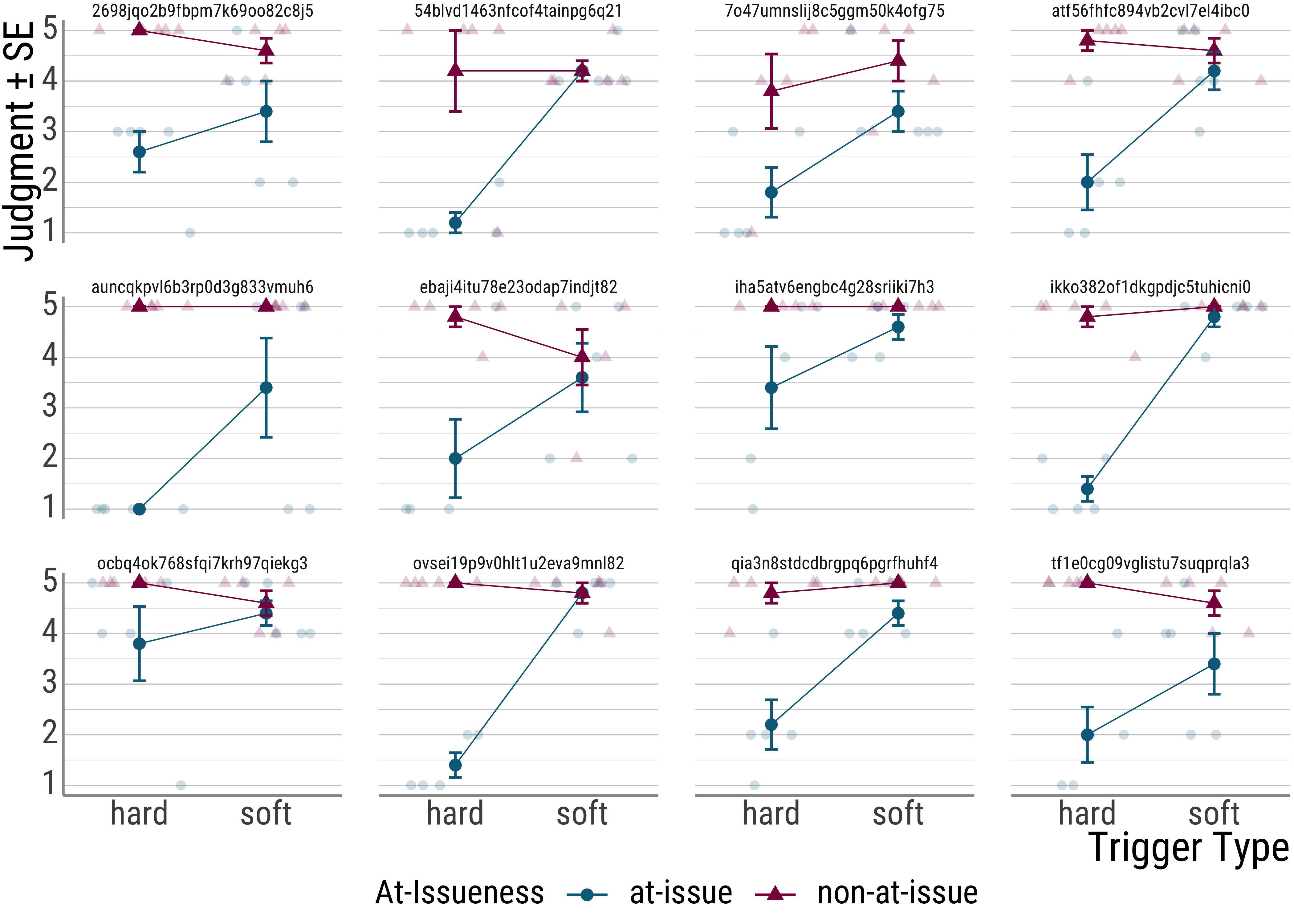

To see this, let’s look at some of the participants in our experiment:

As we can see, though all participants show the overall effect, they differ from each other in quite significant ways. This individual variation is something we would like to account for in our statistics.

As we can see, though all participants show the overall effect, they differ from each other in quite significant ways. This individual variation is something we would like to account for in our statistics.

For the aov function call, it is relatively easy to add repeated measures, simply add the grouping variable (the people) and the factors whose different levels were tested with each of them (trigger type) as an Error() term in your model.

anova_psp <- aov(judgment ~ trigger_cat + Error(id / (trigger_cat)), data = d_psp)

summary(anova_psp)##

## Error: id

## Df Sum Sq Mean Sq F value Pr(>F)

## trigger_cat 1 1.041 1.0408 0.2506 0.6202

## Residuals 31 128.752 4.1533

##

## Error: id:trigger_cat

## Df Sum Sq Mean Sq F value Pr(>F)

## trigger_cat 1 104.954 104.954 48.21 0.00000007297 ***

## Residuals 32 69.665 2.177

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Error: Within

## Df Sum Sq Mean Sq F value Pr(>F)

## Residuals 591 1059.7 1.793We could report this like this:

The predicted main effect of TRIGGER TYPE was significant, \(F(1,31) = 48.21, p < .001\).

A lot of people find the following ANOVA command from the afex package (Singmann et al. 2016) a little bit easier to use, so I am including it here.

library(afex)

afex_psp <- aov_ez(

"id",

"judgment",

within = "trigger_cat",

data = d_psp,

print.formula = TRUE

)

afex_psp## Anova Table (Type 3 tests)

##

## Response: judgment

## Effect df MSE F ges p.value

## 1 trigger_cat 1, 32 0.22 48.54 *** .347 <.001

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '+' 0.1 ' ' 1So, what we’ve done so far:

We moved from the

3.4 Two-Way ANOVA

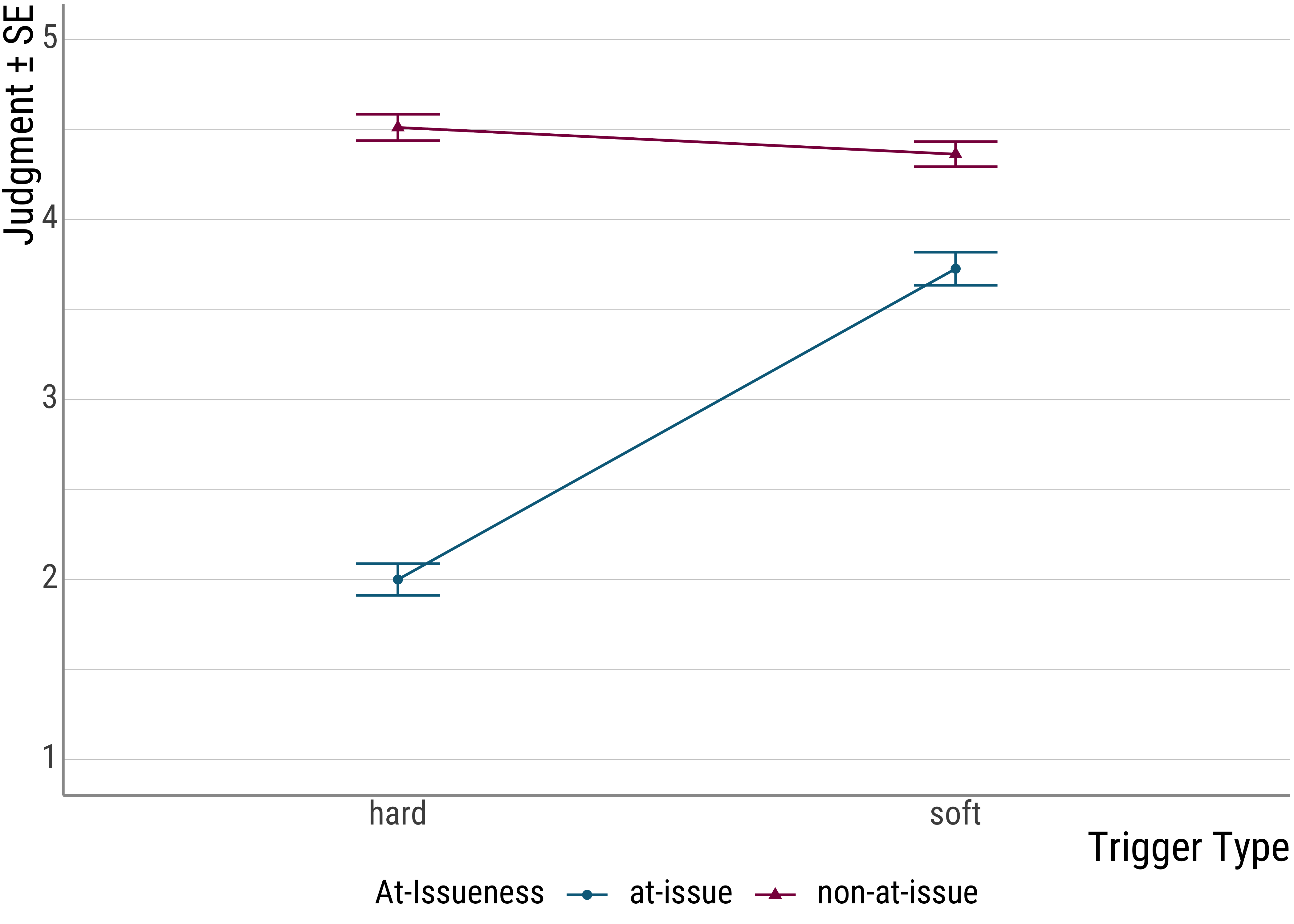

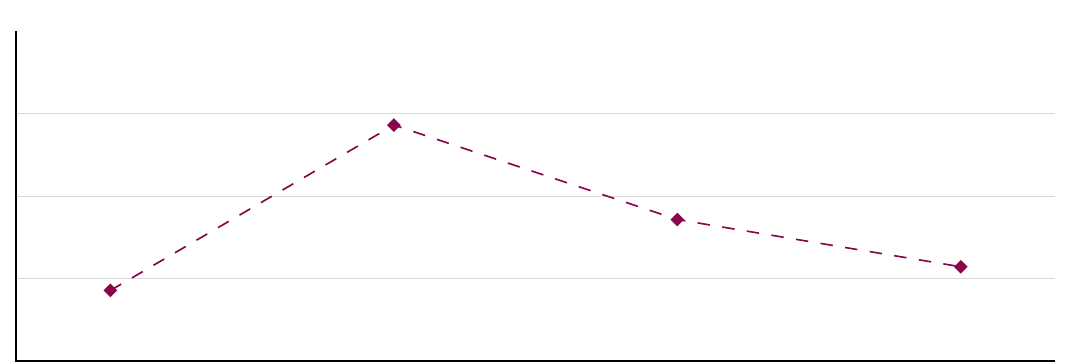

Let’s look at the plot for the presupposition data again:

From the plot (and the original experiment) is set up, you can see that there seems to be another factor at play: at-issueness.

In the critical items, the clowns question either targeted the foregrounded (at-issue) portion of the critical utterance or the backgrounded, presupposed material.

As you can see, this factor seems to be quite important because it drives a quite severe judgment asymmetry for the hard triggers: When the presupposition of a hard trigger is used to answer a question, infelicity ensues. For the soft triggers, this is much less pronounced.

In what is to come, we will incorporate two factors into our model. As we saw before, the \(t\)-test is no longer applicable in these cases, so we will work with the ANOVA for now.

psp_two <- aov_car(judgment ~ trigger_cat + issue + Error(id / (trigger_cat + issue)), data = d_psp)## Warning: More than one observation per design cell, aggregating data using `fun_aggregate = mean`.

## To turn off this warning, pass `fun_aggregate = mean` explicitly.psp_two## Anova Table (Type 3 tests)

##

## Response: judgment

## Effect df MSE F ges p.value

## 1 trigger_cat 1, 32 0.43 47.05 *** .263 <.001

## 2 issue 1, 32 0.29 286.14 *** .589 <.001

## 3 trigger_cat:issue 1, 32 0.25 117.52 *** .337 <.001

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '+' 0.1 ' ' 1The first two lines in the table are our main effects, which say that the trigger type and at-issueness manipulations lead to significantly different judgments.

Question: But what about the third line?

The third line is a so-called interaction. Recall that main effects describe significant differences. Interactions are probably best conceptualized as differences between differences.

As we saw in the plot, the at-issueness factor seems to affect hard presupposition triggers more strongly than soft ones. In a sense, we need to look at the settings of both manipulations in order to predict the judgments that participants are likely to make.

Note that this also affects our main effect for issueness. Before we simply that there is a significant difference between the two levels of this factor. However, with both the plot and the significant interaction under our belt, we now that we should be a little more careful: While there is something going on with at-issueness, the main effect is mainly driven by the hard presupposition triggers, with the soft triggers, the effect is almost negligible.

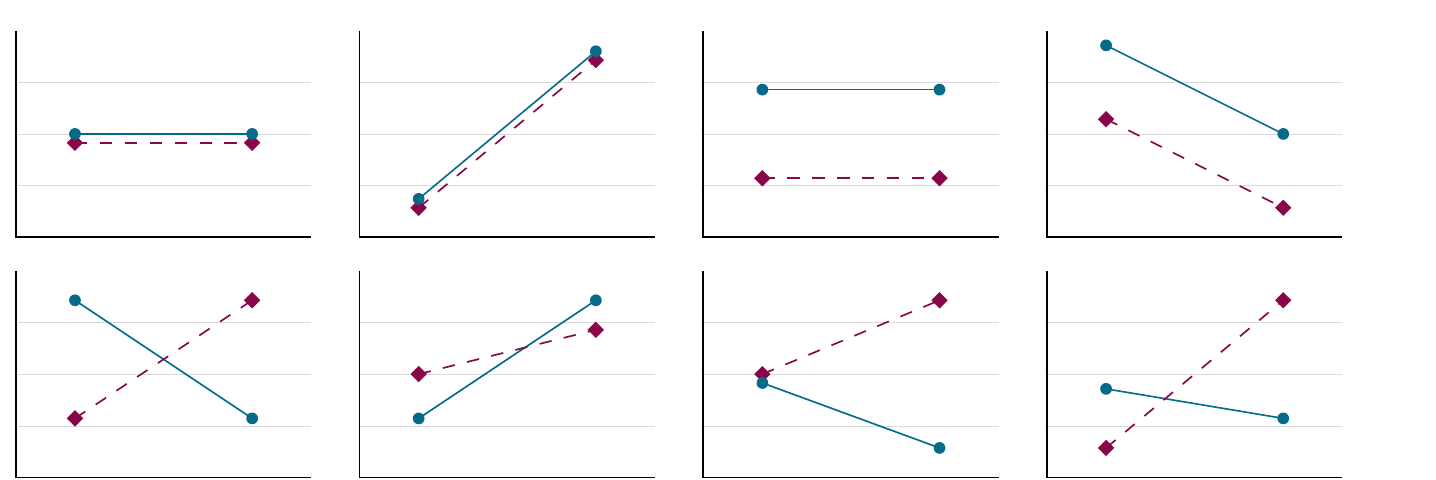

In the plots below, we have some more interactions:

- Above: main effect possibilities without interactions

- below: including interactions – main effects determined by means, interactions by slopes.

- Ltr/ttb: no main effect, main effect for \(x\) factor, split factor main effect, main effects for both, no main effect but interaction, \(x\) factor effect plus interaction, split factor effect plus interaction, two main effects plus interaction.

Figure 3.1: Interactions

3.5 More repeated measures

Question: Is there another repeated measures source in our data? Have a look again and see if you can spot one.

## # A tibble: 657 × 5

## id itemid trigger_cat issue judgment

## <chr> <chr> <chr> <chr> <dbl>

## 1 auncqkpvl6b3rp0d3g833vmuh6 gewinnen2 soft at-issue 5

## 2 auncqkpvl6b3rp0d3g833vmuh6 schaffen1 soft at-issue 1

## 3 auncqkpvl6b3rp0d3g833vmuh6 auch3 hard at-issue 1

## 4 auncqkpvl6b3rp0d3g833vmuh6 entdecken1 soft at-issue 5

## 5 auncqkpvl6b3rp0d3g833vmuh6 schaffen3 soft at-issue 1

## 6 auncqkpvl6b3rp0d3g833vmuh6 cleft3 hard non-at-issue 5

## 7 auncqkpvl6b3rp0d3g833vmuh6 wieder1 hard non-at-issue 5

## 8 auncqkpvl6b3rp0d3g833vmuh6 auch2 hard non-at-issue 5

## 9 auncqkpvl6b3rp0d3g833vmuh6 auch1 hard at-issue 1

## 10 auncqkpvl6b3rp0d3g833vmuh6 entdecken2 soft non-at-issue 5

## # … with 647 more rowsYes, we also have several items for each condition. But how do we deal with this in the context of our ANOVA? We cannot, unfortunately, have both a by-subject ANOVA and a by-items ANOVA at the same time.

So, what people do in these cases is to compute two ANOVAs and report both – often, the by-participants ANOVA is reported as \(F_1\), and the by-items ANOVA as \(F_2\). This then means that for each effect, you would need both of the ANOVAs to yield a significant result.

3.6 ANOVA by hand

In my own first encounter with statistics, I had to do an ANOVA by hand. While at the time I thought it was a little grueling, in the end I thought it was one of the most important excercises.

So, I apologize in advance.

This is the data we will be working with:

d <- tibble(

student = c("Raphael", "Nicolaus", "Balthasar", "Claudia", "Heike", "Marion"),

time1 = c(14.3, 16.2, 17.2, 12.8, 14.9, 11.1),

time2 = c(19.2, 15.9, 17.2, 20.3, 25.3, 12.1),

time3 = c(27.3, 33.5, 42.9, 33.2, 58.3, 39.1)

)

d## # A tibble: 6 × 4

## student time1 time2 time3

## <chr> <dbl> <dbl> <dbl>

## 1 Raphael 14.3 19.2 27.3

## 2 Nicolaus 16.2 15.9 33.5

## 3 Balthasar 17.2 17.2 42.9

## 4 Claudia 12.8 20.3 33.2

## 5 Heike 14.9 25.3 58.3

## 6 Marion 11.1 12.1 39.1As you might know, it often desirable to have the data in what is called the long format (as opposed to the wide one we have up there). Here is a way to achieve that:

d <- d %>%

pivot_longer(

cols = c(time1, time2, time3),

names_to = "when",

values_to = "time"

)

d## # A tibble: 18 × 3

## student when time

## <chr> <chr> <dbl>

## 1 Raphael time1 14.3

## 2 Raphael time2 19.2

## 3 Raphael time3 27.3

## 4 Nicolaus time1 16.2

## 5 Nicolaus time2 15.9

## 6 Nicolaus time3 33.5

## 7 Balthasar time1 17.2

## 8 Balthasar time2 17.2

## 9 Balthasar time3 42.9

## 10 Claudia time1 12.8

## 11 Claudia time2 20.3

## 12 Claudia time3 33.2

## 13 Heike time1 14.9

## 14 Heike time2 25.3

## 15 Heike time3 58.3

## 16 Marion time1 11.1

## 17 Marion time2 12.1

## 18 Marion time3 39.1With the way the data is structured above, we see the repeated measures much more clearly: For each participant, we have several rows, each of which contain one observation.

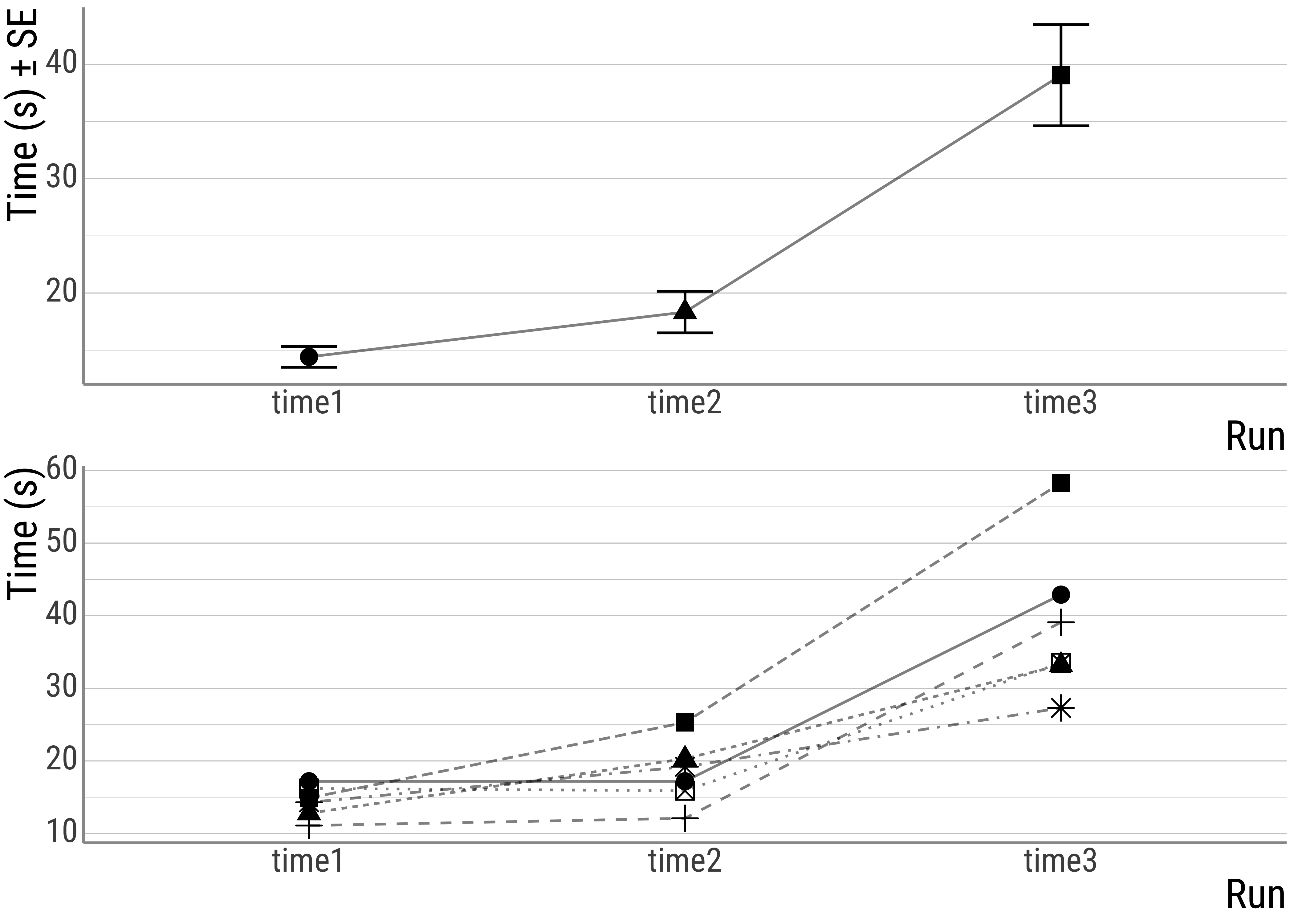

Before we jump into things, here’s a plot:

Figure 3.2: top: overall times, bottom: individual times.

In general, the ANOVA is just like the \(t\)-test in that we divide the systematic variance by the unsystematic variance. You already know that the statistic that underlies the ANOVA is the \(F\) value:

\[F = \frac{MS_{model}}{MS_{error}}\] And the general intuition is this: We can divide the variance in a data set into these two portions in the equation above:

\[s^2_{total} = s^2_{model} + s^2_{error}\] As the first step, we want the total sum of squares, where \(i\) is the index of the factor levels and \(y_{im}\) is the single observation of one participant under a certain factor level:

\[QS_{total} = \sum\limits_{i}\sum\limits_{m} (y_{im} - \bar{x})^2\]

Now, we want the variance that the factors contribute to the total variance. \(i\) is again the index for the factor levels and \(n\) is the number of observations for that factor level:

\[QS_{model} = \sum\limits_{i} n \cdot (\bar{x}_i - \bar{x})^2\]

Next step: We need the source of random variation, which is represented by the difference of the single observations from the group mean (\(y_{mi}\) is the value of the participant \(m\) measured at factor level \(i\):

\[QS_{error} = \sum\limits_{i}\sum\limits_{m} (y_{im} - \bar{x}_i)^2\] As with the \(t\)-test, we also need the degrees of freedom, where \(N\) is the total number of observations, \(p\) is the number of factor levels:

\[df_{QS_{total}} = N - 1\] \[df_{QS_{model}} = p - 1\] \[df_{QS_{error}} = N - p\]

For the final step, we need to account for the fact the we used more observations for the \(QS_{error}\) (18) than we did for the \(QS_{model}\) (3), so we need to normalize the sums of squares by dividing them by the corresponding degrees of freedom, which gives us the mean squares:

\[MS_{error} = \frac{QS_{error}}{df_{error}}\]

\[MS_{model} = \frac{QS_{model}}{df_{model}}\] And then we have everything to do the final computation:

\[F = \frac{MS_{model}}{MS_{error}}\]

Now, we need the grand mean and the condition means for each time point:

d <- d %>%

mutate(

grand_mean = mean(time),

mean_run1 = mean(d$time[d$when == "time1"]),

mean_run2 = mean(d$time[d$when == "time2"]),

mean_run3 = mean(d$time[d$when == "time3"])

)

d## # A tibble: 18 × 7

## student when time grand_mean mean_run1 mean_run2 mean_run3

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 Raphael time1 14.3 23.9 14.4 18.3 39.0

## 2 Raphael time2 19.2 23.9 14.4 18.3 39.0

## 3 Raphael time3 27.3 23.9 14.4 18.3 39.0

## 4 Nicolaus time1 16.2 23.9 14.4 18.3 39.0

## 5 Nicolaus time2 15.9 23.9 14.4 18.3 39.0

## 6 Nicolaus time3 33.5 23.9 14.4 18.3 39.0

## 7 Balthasar time1 17.2 23.9 14.4 18.3 39.0

## 8 Balthasar time2 17.2 23.9 14.4 18.3 39.0

## 9 Balthasar time3 42.9 23.9 14.4 18.3 39.0

## 10 Claudia time1 12.8 23.9 14.4 18.3 39.0

## 11 Claudia time2 20.3 23.9 14.4 18.3 39.0

## 12 Claudia time3 33.2 23.9 14.4 18.3 39.0

## 13 Heike time1 14.9 23.9 14.4 18.3 39.0

## 14 Heike time2 25.3 23.9 14.4 18.3 39.0

## 15 Heike time3 58.3 23.9 14.4 18.3 39.0

## 16 Marion time1 11.1 23.9 14.4 18.3 39.0

## 17 Marion time2 12.1 23.9 14.4 18.3 39.0

## 18 Marion time3 39.1 23.9 14.4 18.3 39.0Next steps: We want the square sum of errors for the entire model. Lets do this step by step:

d <- d %>%

mutate(

grand_mean_diff = (time - grand_mean)^2,

sum_sq_total = sum(grand_mean_diff),

) %>%

mutate(

# differences for each factor level

# grand mean differences

diff_time1 = mean_run1 - grand_mean,

diff_time2 = mean_run2 - grand_mean,

diff_time3 = mean_run3 - grand_mean,

# square the differences and multiply by n

Diff_time1 = (diff_time1^2) * 6,

Diff_time2 = (diff_time2^2) * 6,

Diff_time3 = (diff_time3^2) * 6,

# and add them all up

sum_sq_model = Diff_time1 + Diff_time2 + Diff_time3

)

d## # A tibble: 18 × 16

## student when time grand_mean mean_run1 mean_run2 mean_run3 grand_mean_diff sum_sq_total diff_time1 diff_time2

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 Raphael time1 14.3 23.9 14.4 18.3 39.0 92.8 2815. -9.52 -5.6

## 2 Raphael time2 19.2 23.9 14.4 18.3 39.0 22.4 2815. -9.52 -5.6

## 3 Raphael time3 27.3 23.9 14.4 18.3 39.0 11.3 2815. -9.52 -5.6

## 4 Nicolaus time1 16.2 23.9 14.4 18.3 39.0 59.8 2815. -9.52 -5.6

## 5 Nicolaus time2 15.9 23.9 14.4 18.3 39.0 64.5 2815. -9.52 -5.6

## 6 Nicolaus time3 33.5 23.9 14.4 18.3 39.0 91.5 2815. -9.52 -5.6

## 7 Balthasar time1 17.2 23.9 14.4 18.3 39.0 45.3 2815. -9.52 -5.6

## 8 Balthasar time2 17.2 23.9 14.4 18.3 39.0 45.3 2815. -9.52 -5.6

## 9 Balthasar time3 42.9 23.9 14.4 18.3 39.0 360. 2815. -9.52 -5.6

## 10 Claudia time1 12.8 23.9 14.4 18.3 39.0 124. 2815. -9.52 -5.6

## 11 Claudia time2 20.3 23.9 14.4 18.3 39.0 13.2 2815. -9.52 -5.6

## 12 Claudia time3 33.2 23.9 14.4 18.3 39.0 85.9 2815. -9.52 -5.6

## 13 Heike time1 14.9 23.9 14.4 18.3 39.0 81.6 2815. -9.52 -5.6

## 14 Heike time2 25.3 23.9 14.4 18.3 39.0 1.87 2815. -9.52 -5.6

## 15 Heike time3 58.3 23.9 14.4 18.3 39.0 1181. 2815. -9.52 -5.6

## 16 Marion time1 11.1 23.9 14.4 18.3 39.0 165. 2815. -9.52 -5.6

## 17 Marion time2 12.1 23.9 14.4 18.3 39.0 140. 2815. -9.52 -5.6

## 18 Marion time3 39.1 23.9 14.4 18.3 39.0 230. 2815. -9.52 -5.6

## diff_time3 Diff_time1 Diff_time2 Diff_time3 sum_sq_model

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 15.1 543. 188. 1371. 2103.

## 2 15.1 543. 188. 1371. 2103.

## 3 15.1 543. 188. 1371. 2103.

## 4 15.1 543. 188. 1371. 2103.

## 5 15.1 543. 188. 1371. 2103.

## 6 15.1 543. 188. 1371. 2103.

## 7 15.1 543. 188. 1371. 2103.

## 8 15.1 543. 188. 1371. 2103.

## 9 15.1 543. 188. 1371. 2103.

## 10 15.1 543. 188. 1371. 2103.

## 11 15.1 543. 188. 1371. 2103.

## 12 15.1 543. 188. 1371. 2103.

## 13 15.1 543. 188. 1371. 2103.

## 14 15.1 543. 188. 1371. 2103.

## 15 15.1 543. 188. 1371. 2103.

## 16 15.1 543. 188. 1371. 2103.

## 17 15.1 543. 188. 1371. 2103.

## 18 15.1 543. 188. 1371. 2103.And now we need the square sum of the errors (sorry for switching to base R here, I have not found a satisfying way of doing this with dplyr). Again, we want to see how far the individual times deviate from the the respective condition means:

d$error_diff[d$when == "time1"] <- (d$time[d$when == "time1"] - d$mean_run1[d$when == "time1"])^2## Warning: Unknown or uninitialised column: `error_diff`.d$error_diff[d$when == "time2"] <- (d$time[d$when == "time2"] - d$mean_run2[d$when == "time2"])^2

d$error_diff[d$when == "time3"] <- (d$time[d$when == "time3"] - d$mean_run3[d$when == "time3"])^2

d$sum_sq_error <- sum(d$error_diff)Now let’s check whether the total sum of squares is made up of the sums of squares of the model and the errors:

mean(d$sum_sq_total) == mean(d$sum_sq_model) + mean(d$sum_sq_error)## [1] TRUENext step: degrees of freedom.

d <- d %>%

mutate(

df_total = length(time) - 1,

df_model = length(unique(when)) - 1,

df_error = length(time) - length(unique(when))

)

d## # A tibble: 18 × 21

## student when time grand_mean mean_run1 mean_run2 mean_run3 grand_mean_diff sum_sq_total diff_time1 diff_time2

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 Raphael time1 14.3 23.9 14.4 18.3 39.0 92.8 2815. -9.52 -5.6

## 2 Raphael time2 19.2 23.9 14.4 18.3 39.0 22.4 2815. -9.52 -5.6

## 3 Raphael time3 27.3 23.9 14.4 18.3 39.0 11.3 2815. -9.52 -5.6

## 4 Nicolaus time1 16.2 23.9 14.4 18.3 39.0 59.8 2815. -9.52 -5.6

## 5 Nicolaus time2 15.9 23.9 14.4 18.3 39.0 64.5 2815. -9.52 -5.6

## 6 Nicolaus time3 33.5 23.9 14.4 18.3 39.0 91.5 2815. -9.52 -5.6

## 7 Balthasar time1 17.2 23.9 14.4 18.3 39.0 45.3 2815. -9.52 -5.6

## 8 Balthasar time2 17.2 23.9 14.4 18.3 39.0 45.3 2815. -9.52 -5.6

## 9 Balthasar time3 42.9 23.9 14.4 18.3 39.0 360. 2815. -9.52 -5.6

## 10 Claudia time1 12.8 23.9 14.4 18.3 39.0 124. 2815. -9.52 -5.6

## 11 Claudia time2 20.3 23.9 14.4 18.3 39.0 13.2 2815. -9.52 -5.6

## 12 Claudia time3 33.2 23.9 14.4 18.3 39.0 85.9 2815. -9.52 -5.6

## 13 Heike time1 14.9 23.9 14.4 18.3 39.0 81.6 2815. -9.52 -5.6

## 14 Heike time2 25.3 23.9 14.4 18.3 39.0 1.87 2815. -9.52 -5.6

## 15 Heike time3 58.3 23.9 14.4 18.3 39.0 1181. 2815. -9.52 -5.6

## 16 Marion time1 11.1 23.9 14.4 18.3 39.0 165. 2815. -9.52 -5.6

## 17 Marion time2 12.1 23.9 14.4 18.3 39.0 140. 2815. -9.52 -5.6

## 18 Marion time3 39.1 23.9 14.4 18.3 39.0 230. 2815. -9.52 -5.6

## diff_time3 Diff_time1 Diff_time2 Diff_time3 sum_sq_model error_diff sum_sq_error df_total df_model df_error

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <int>

## 1 15.1 543. 188. 1371. 2103. 0.0136 712. 17 2 15

## 2 15.1 543. 188. 1371. 2103. 0.751 712. 17 2 15

## 3 15.1 543. 188. 1371. 2103. 138. 712. 17 2 15

## 4 15.1 543. 188. 1371. 2103. 3.18 712. 17 2 15

## 5 15.1 543. 188. 1371. 2103. 5.92 712. 17 2 15

## 6 15.1 543. 188. 1371. 2103. 30.8 712. 17 2 15

## 7 15.1 543. 188. 1371. 2103. 7.75 712. 17 2 15

## 8 15.1 543. 188. 1371. 2103. 1.28 712. 17 2 15

## 9 15.1 543. 188. 1371. 2103. 14.8 712. 17 2 15

## 10 15.1 543. 188. 1371. 2103. 2.61 712. 17 2 15

## 11 15.1 543. 188. 1371. 2103. 3.87 712. 17 2 15

## 12 15.1 543. 188. 1371. 2103. 34.2 712. 17 2 15

## 13 15.1 543. 188. 1371. 2103. 0.234 712. 17 2 15

## 14 15.1 543. 188. 1371. 2103. 48.5 712. 17 2 15

## 15 15.1 543. 188. 1371. 2103. 371. 712. 17 2 15

## 16 15.1 543. 188. 1371. 2103. 11.0 712. 17 2 15

## 17 15.1 543. 188. 1371. 2103. 38.9 712. 17 2 15

## 18 15.1 543. 188. 1371. 2103. 0.00250 712. 17 2 15# sanity check here as well:

d$df_total == d$df_model + d$df_error## [1] TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUEAnd now we get to the meat of the ANOVA: the mean sums of squares

d <- d %>%

mutate(

mean_sum_squares_model = mean(sum_sq_model) / df_model,

mean_sum_squares_error = mean(sum_sq_error) / df_error

)

d## # A tibble: 18 × 23

## student when time grand_mean mean_run1 mean_run2 mean_run3 grand_mean_diff sum_sq_total diff_time1 diff_time2

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 Raphael time1 14.3 23.9 14.4 18.3 39.0 92.8 2815. -9.52 -5.6

## 2 Raphael time2 19.2 23.9 14.4 18.3 39.0 22.4 2815. -9.52 -5.6

## 3 Raphael time3 27.3 23.9 14.4 18.3 39.0 11.3 2815. -9.52 -5.6

## 4 Nicolaus time1 16.2 23.9 14.4 18.3 39.0 59.8 2815. -9.52 -5.6

## 5 Nicolaus time2 15.9 23.9 14.4 18.3 39.0 64.5 2815. -9.52 -5.6

## 6 Nicolaus time3 33.5 23.9 14.4 18.3 39.0 91.5 2815. -9.52 -5.6

## 7 Balthasar time1 17.2 23.9 14.4 18.3 39.0 45.3 2815. -9.52 -5.6

## 8 Balthasar time2 17.2 23.9 14.4 18.3 39.0 45.3 2815. -9.52 -5.6

## 9 Balthasar time3 42.9 23.9 14.4 18.3 39.0 360. 2815. -9.52 -5.6

## 10 Claudia time1 12.8 23.9 14.4 18.3 39.0 124. 2815. -9.52 -5.6

## 11 Claudia time2 20.3 23.9 14.4 18.3 39.0 13.2 2815. -9.52 -5.6

## 12 Claudia time3 33.2 23.9 14.4 18.3 39.0 85.9 2815. -9.52 -5.6

## 13 Heike time1 14.9 23.9 14.4 18.3 39.0 81.6 2815. -9.52 -5.6

## 14 Heike time2 25.3 23.9 14.4 18.3 39.0 1.87 2815. -9.52 -5.6

## 15 Heike time3 58.3 23.9 14.4 18.3 39.0 1181. 2815. -9.52 -5.6

## 16 Marion time1 11.1 23.9 14.4 18.3 39.0 165. 2815. -9.52 -5.6

## 17 Marion time2 12.1 23.9 14.4 18.3 39.0 140. 2815. -9.52 -5.6

## 18 Marion time3 39.1 23.9 14.4 18.3 39.0 230. 2815. -9.52 -5.6

## diff_time3 Diff_time1 Diff_time2 Diff_time3 sum_sq_model error_diff sum_sq_error df_total df_model df_error

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <int>

## 1 15.1 543. 188. 1371. 2103. 0.0136 712. 17 2 15

## 2 15.1 543. 188. 1371. 2103. 0.751 712. 17 2 15

## 3 15.1 543. 188. 1371. 2103. 138. 712. 17 2 15

## 4 15.1 543. 188. 1371. 2103. 3.18 712. 17 2 15

## 5 15.1 543. 188. 1371. 2103. 5.92 712. 17 2 15

## 6 15.1 543. 188. 1371. 2103. 30.8 712. 17 2 15

## 7 15.1 543. 188. 1371. 2103. 7.75 712. 17 2 15

## 8 15.1 543. 188. 1371. 2103. 1.28 712. 17 2 15

## 9 15.1 543. 188. 1371. 2103. 14.8 712. 17 2 15

## 10 15.1 543. 188. 1371. 2103. 2.61 712. 17 2 15

## 11 15.1 543. 188. 1371. 2103. 3.87 712. 17 2 15

## 12 15.1 543. 188. 1371. 2103. 34.2 712. 17 2 15

## 13 15.1 543. 188. 1371. 2103. 0.234 712. 17 2 15

## 14 15.1 543. 188. 1371. 2103. 48.5 712. 17 2 15

## 15 15.1 543. 188. 1371. 2103. 371. 712. 17 2 15

## 16 15.1 543. 188. 1371. 2103. 11.0 712. 17 2 15

## 17 15.1 543. 188. 1371. 2103. 38.9 712. 17 2 15

## 18 15.1 543. 188. 1371. 2103. 0.00250 712. 17 2 15

## mean_sum_squares_model mean_sum_squares_error

## <dbl> <dbl>

## 1 1051. 47.5

## 2 1051. 47.5

## 3 1051. 47.5

## 4 1051. 47.5

## 5 1051. 47.5

## 6 1051. 47.5

## 7 1051. 47.5

## 8 1051. 47.5

## 9 1051. 47.5

## 10 1051. 47.5

## 11 1051. 47.5

## 12 1051. 47.5

## 13 1051. 47.5

## 14 1051. 47.5

## 15 1051. 47.5

## 16 1051. 47.5

## 17 1051. 47.5

## 18 1051. 47.5Finally, by diving systematic variance (contributed by our experimental manipulation) by unsystematic variance (contributed by randomness), we get our \(F\) value:

f_value <- mean(d$mean_sum_squares_model) / mean(d$mean_sum_squares_error)

f_value## [1] 22.133813And now let’s see whether R comes up with the same results:

summary(aov(time ~ when, data = d))## Df Sum Sq Mean Sq F value Pr(>F)

## when 2 2102.64 1051.3 22.134 0.00003346 ***

## Residuals 15 712.48 47.5

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1summary(lm(time ~ when, data = d))##

## Call:

## lm(formula = time ~ when, data = d)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.75000 -3.09583 -0.03333 1.92083 19.25000

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 14.4167 2.8136 5.1239 0.0001247 ***

## whentime2 3.9167 3.9790 0.9843 0.3405686

## whentime3 24.6333 3.9790 6.1908 0.00001728 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6.8919 on 15 degrees of freedom

## Multiple R-squared: 0.74691, Adjusted R-squared: 0.71317

## F-statistic: 22.134 on 2 and 15 DF, p-value: 0.000033462Question: Why can we not use the t.test() here?

3.7 Interpreting an ANOVA

And now let’s talk about interpretation:

Here is a situation where we have one independent variable (our model would be of the one-factor kind) but it has 4 levels:

Figure 3.3: One factorial design (four factor levels).

Question: Suppose that our one-way ANOVA indicates that we have a main effect in the situation above. What does that mean? How would you interpret the main effect?

3.8 Wrap-Up

Today we talked about the common core between a number of statistical tests. In particular, we saw that the t-test, the ANOVA, and the LM are equivalent in the simple, one-factor-two-levels cases (without repeated measures).

So, in fact, while we talked about different tests, you actually learned one statistical approach: line fitting by minimizing variance.

Then we talked about repeated measures, as a systematic portion of variance that is unrelated to our experimental manipulation. Instead, it is systematically associated with a random portion of our experiments, namely the sampling process.

Importantly, in psycholinguistics we often have two instances of repeated measures: the participants and the items. We saw a way of dealing with this by computing two ANOVAs. But this is not very satisfying since we would like a model that can deal with both repeated measures components at the same time. We will see one at the end of the class.

Finally, we saw how to compute a two-way ANOVA, namely a model where we are interested in two factors at the same time (as well as their interaction).